Network Transmission

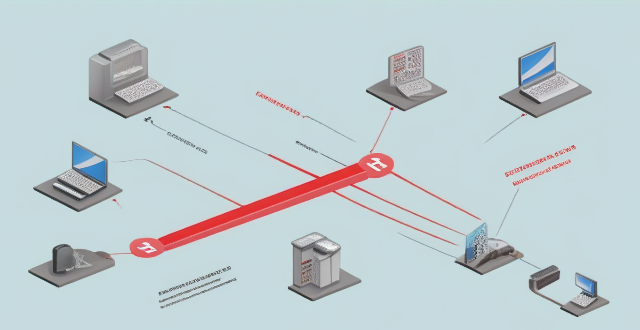

Is network slicing secure for sensitive data transmission?

Network slicing is a technology that allows multiple virtual networks to coexist on a shared physical infrastructure, enabling service providers to offer customized services with different QoS requirements. It offers benefits such as customization, flexible resource allocation, scalability, and isolation between slices, but it also raises security concerns: data must not leak between slices that share hardware, access to each slice must be tightly controlled, traffic must be protected in transit, and attacks against one slice must be detected before they spread. By implementing robust isolation mechanisms, strict access control policies, strong encryption, and effective intrusion detection and prevention systems (IDPS), service providers can use network slicing while keeping sensitive data transmission secure; slicing alone does not guarantee it.

How does network expansion improve internet speed?

Network expansion can improve internet speeds by reducing congestion, shortening the distance data must travel, increasing available bandwidth, improving redundancy, and allowing the network to scale. It involves adding capacity, such as more routers, switches, and links, or upgrading existing equipment. Distributing traffic across multiple routes keeps individual links from becoming bottlenecks, so internet service providers can meet growing demand for high-speed connections while keeping service fast and reliable.
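Distributing traffic across multiple routes is commonly done with equal-cost multipath (ECMP) routing, where a router hashes each flow's 5-tuple to choose among several equally good paths. The sketch below assumes SHA-256 as the hash (real routers use their own, usually hardware, hash functions), and `pick_path` is a hypothetical name:

```python
import hashlib

def pick_path(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
              protocol: str, num_paths: int) -> int:
    """Map a flow's 5-tuple to one of num_paths equal-cost routes (hypothetical helper)."""
    if num_paths < 1:
        raise ValueError("num_paths must be at least 1")
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{protocol}".encode()
    # A stable hash sends every packet of the same flow down the same path.
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % num_paths

if __name__ == "__main__":
    flows = [("10.0.0.1", "10.0.1.9", 40000 + i, 443, "tcp") for i in range(8)]
    for flow in flows:
        print(flow[2], "->", pick_path(*flow, num_paths=4))
```

Hashing per flow rather than per packet keeps each connection on one path, which avoids packet reordering while still spreading many flows across all available links.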

What are the most common types of network connectivity devices?

The most common network connectivity devices are routers, switches, modems, and wireless access points. Routers forward data packets between networks, choosing a path for each packet based on its destination address. Switches connect devices within the same local network. Modems modulate and demodulate signals, converting digital data to and from the analog or line signals carried over telephone, cable, or other communication channels. Wireless access points let wireless devices join a wired network. Each device has characteristic features, such as routing decisions, data forwarding, security functions, and error detection and correction.
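A router's forwarding decision can be sketched as a longest-prefix match: among all routes whose prefix contains the destination address, the most specific one wins. The routing table and next-hop names below are hypothetical, and real routers use specialized data structures (such as tries) rather than a linear scan:

```python
import ipaddress

# Hypothetical routing table: (destination prefix, next hop).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "isp-uplink"),  # default route
    (ipaddress.ip_network("10.0.0.0/8"), "core-router"),
    (ipaddress.ip_network("10.1.0.0/16"), "branch-office"),
    (ipaddress.ip_network("10.1.2.0/24"), "lab-switch"),
]

def next_hop(destination: str) -> str:
    """Return the next hop of the most specific (longest) matching prefix."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    # The /0 default route guarantees at least one match for any IPv4 address.
    return max(matches, key=lambda item: item[0].prefixlen)[1]

if __name__ == "__main__":
    for dest in ("10.1.2.7", "10.1.9.9", "10.200.0.1", "8.8.8.8"):
        print(dest, "->", next_hop(dest))
```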

How do communication protocols ensure data integrity and security during transmission?

Communication protocols protect data integrity with mechanisms such as checksums, which detect corrupted data; sequence numbers, which detect lost, duplicated, or reordered packets; and acknowledgments, which confirm delivery and trigger retransmission when data goes missing. They protect data security with encryption, authentication, and secure protocols such as TLS. By following these rules, devices can communicate reliably and securely over networks.
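As a concrete example of a checksum, the sketch below computes a 16-bit one's-complement sum in the style of the Internet checksum (RFC 1071) used in IP, TCP, and UDP headers. It is a simplified illustration: it checksums an arbitrary byte string and ignores protocol details such as the TCP/UDP pseudo-header:

```python
def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement checksum in the style of RFC 1071."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # add the next 16-bit word
        total = (total & 0xFFFF) + (total >> 16)  # fold any carry back in
    return ~total & 0xFFFF

if __name__ == "__main__":
    payload = bytes.fromhex("0001f203f4f5f6f7")
    checksum = internet_checksum(payload)
    print(hex(checksum))
    # The receiver checksums data + checksum; 0 means no error was detected.
    print(internet_checksum(payload + checksum.to_bytes(2, "big")))
```

A checksum detects accidental corruption only; an attacker can modify the data and recompute it, which is why security relies on cryptographic mechanisms such as message authentication codes instead.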

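How do compression algorithms contribute to network optimization?

Compression algorithms contribute to network optimization by reducing the amount of data that must be transmitted, which speeds up transfers, lowers bandwidth consumption, and improves overall network performance. Smaller payloads also make backups and disaster-recovery replication faster. Compression is sometimes credited with security benefits as well, but it does not replace encryption.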
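The effect of compression on transmitted volume is easy to measure. This sketch assumes zlib (DEFLATE) as a representative algorithm and compares repetitive, text-like data with random bytes, which stand in for data that is already compressed or encrypted:

```python
import os
import zlib

def compressed_size_ratio(payload: bytes, level: int = 6) -> float:
    """Return compressed size / original size (lower means bigger savings)."""
    compressed = zlib.compress(payload, level)
    # DEFLATE is lossless: the receiver must get back exactly what was sent.
    if zlib.decompress(compressed) != payload:
        raise RuntimeError("compression round trip failed")
    return len(compressed) / len(payload)

if __name__ == "__main__":
    text_like = b"GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n" * 200
    random_like = os.urandom(len(text_like))
    print(f"repetitive text: {compressed_size_ratio(text_like):.3f}")
    print(f"random bytes:    {compressed_size_ratio(random_like):.3f}")
```

Random data barely shrinks (and may even grow slightly), so compression pays off most for text, logs, and similar formats.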

Can network expansion solve issues related to network congestion?

Network congestion is a common problem that degrades network performance, causing delays and reduced efficiency. One potential solution is network expansion: increasing the capacity of the existing infrastructure by adding hardware or upgrading existing equipment. Expansion can relieve congestion by providing additional bandwidth, which improves overall performance and reduces latency. However, it is costly and requires careful planning and implementation, and it does not by itself remove the underlying causes of congestion, which must also be addressed for long-term success.

What is the role of encryption in securing data transmission?

Encryption secures data transmission by converting plaintext into unreadable ciphertext, so that only parties holding the right key can read it. Combined with integrity checks and authentication (for example, authenticated encryption modes and digital signatures), it protects sensitive information, prevents undetected tampering, builds trust, helps meet regulatory requirements, and reduces the risk of data breaches. The two main types are symmetric encryption, which uses the same secret key to encrypt and decrypt, and asymmetric encryption, which uses a public key to encrypt and a matching private key to decrypt.
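The defining property of symmetric encryption, one shared key for both directions, can be shown with a one-time pad, where each message byte is XORed with a random key byte. This is a teaching toy under stated assumptions, not a production scheme: the pad must be truly random, as long as the message, and never reused, and real systems use authenticated ciphers such as AES-GCM or ChaCha20-Poly1305 with keys agreed through asymmetric cryptography (as in TLS). The function name is hypothetical:

```python
import secrets

def xor_with_pad(data: bytes, pad: bytes) -> bytes:
    """XOR data with a one-time pad; the same call both encrypts and decrypts."""
    if len(pad) != len(data):
        raise ValueError("the pad must be exactly as long as the data")
    return bytes(d ^ p for d, p in zip(data, pad))

if __name__ == "__main__":
    message = b"transfer 100 to account 42"
    pad = secrets.token_bytes(len(message))  # shared secret key, used only once
    ciphertext = xor_with_pad(message, pad)  # sender encrypts
    recovered = xor_with_pad(ciphertext, pad)  # receiver decrypts with the SAME key
    print(ciphertext.hex())
    print(recovered)
```

XOR on its own gives confidentiality but no integrity: flipping a ciphertext bit flips the same plaintext bit without detection, which is why integrity and authentication need separate mechanisms.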

How do communication protocols manage errors and congestion in a network?

Communication protocols play a crucial role in managing errors and congestion in a network. To handle errors, they use checksums to detect corruption, acknowledgments to confirm delivery, timers to trigger retransmission of lost data, and other error recovery mechanisms. To handle congestion, they use traffic shaping and congestion control, which slow senders down when the network is overloaded. Together, these techniques ensure reliable and efficient data transmission between devices on a network.
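TCP's congestion control is built around additive increase, multiplicative decrease (AIMD): the sender grows its congestion window steadily while data is acknowledged and cuts it sharply when loss signals congestion. The sketch below is deliberately simplified, with no slow start or fast recovery, and treats each "ack" event as one loss-free round trip; the function name and parameters are hypothetical:

```python
def aimd(events, initial_cwnd=1.0, increase=1.0, decrease_factor=0.5, min_cwnd=1.0):
    """Return the congestion-window trace (in segments) for 'ack'/'loss' events."""
    cwnd = initial_cwnd
    trace = []
    for event in events:
        if event == "ack":
            cwnd += increase  # additive increase: probe for spare capacity
        elif event == "loss":
            cwnd = max(min_cwnd, cwnd * decrease_factor)  # multiplicative decrease
        else:
            raise ValueError(f"unknown event: {event!r}")
        trace.append(cwnd)
    return trace

if __name__ == "__main__":
    print(aimd(["ack"] * 4 + ["loss"] + ["ack"] * 2))
```

The slow linear climb and fast halving produce TCP's characteristic sawtooth, which lets many senders share a bottleneck without overwhelming it.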

How does network congestion impact latency?

Network congestion can significantly increase latency and degrade overall network performance. When more traffic arrives than a link can carry, packets wait in router and switch queues, which increases transmission time; once queues fill, packets are dropped and must be retransmitted, and each flow gets less of the available bandwidth. Applications that depend on quick responses suffer first. Network administrators should therefore monitor and manage traffic to minimize the impact of congestion on latency and keep applications working properly.
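Why congestion inflates latency can be illustrated with the classic M/M/1 queueing model, which assumes random (Poisson) packet arrivals and exponentially distributed service times. Its mean time in system is W = 1 / (mu - lambda), where mu is the service rate and lambda the arrival rate, so delay grows without bound as load approaches link capacity. Real traffic is burstier than this model, so treat the numbers as illustrative; the capacity value is hypothetical:

```python
def mm1_time_in_system_ms(arrival_pps: float, service_pps: float) -> float:
    """Mean time (waiting + service) a packet spends at an M/M/1 queue, in ms."""
    if arrival_pps >= service_pps:
        return float("inf")  # arrivals outpace service: the queue grows forever
    return 1000.0 / (service_pps - arrival_pps)

if __name__ == "__main__":
    capacity = 10_000  # packets per second the link can serve (hypothetical)
    for load in (0.5, 0.9, 0.99):
        delay = mm1_time_in_system_ms(load * capacity, capacity)
        print(f"utilization {load:.0%}: {delay:.2f} ms")
```

Going from 90% to 99% utilization multiplies the delay by ten in this model, which is why adding capacity or shaping traffic helps long before a link is fully saturated.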

What is the role of a network hub in a computer network?

A network hub connects multiple devices in a network and passes data between them: every frame it receives on one port is repeated out of all its other ports. Because all connected devices share the hub's bandwidth and a single collision domain, hubs have clear limitations, including shared bandwidth, security risks (every device can see all traffic), and poor scalability. Hubs are a simple way to connect devices and share resources, but switches and other more advanced devices are used in larger and more complex networks to overcome these limitations.
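The difference between a hub and the switches that replace it shows up in how each forwards a frame. In this simplified sketch (no broadcast handling, MAC address aging, or VLANs; class names are hypothetical), the hub repeats every frame to all other ports, while the learning switch records which port each source MAC address was seen on and sends later frames only where they need to go:

```python
class Hub:
    """Repeats every frame out of every port except the one it arrived on."""

    def __init__(self, num_ports: int):
        self.num_ports = num_ports

    def forward(self, in_port: int, src_mac: str, dst_mac: str) -> list[int]:
        # A hub ignores addresses entirely, so all devices share the bandwidth.
        return [p for p in range(self.num_ports) if p != in_port]


class LearningSwitch:
    """Learns which port each MAC address is on and forwards selectively."""

    def __init__(self, num_ports: int):
        self.num_ports = num_ports
        self.mac_table: dict[str, int] = {}

    def forward(self, in_port: int, src_mac: str, dst_mac: str) -> list[int]:
        self.mac_table[src_mac] = in_port  # remember where the sender lives
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood, exactly as a hub would.
            return [p for p in range(self.num_ports) if p != in_port]
        return [] if out_port == in_port else [out_port]


if __name__ == "__main__":
    hub, switch = Hub(4), LearningSwitch(4)
    print(hub.forward(0, "aa", "bb"), switch.forward(0, "aa", "bb"))
    print(hub.forward(2, "bb", "aa"), switch.forward(2, "bb", "aa"))
```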

How does network expansion affect the overall network performance?

Network expansion can significantly affect overall performance, bringing benefits such as increased bandwidth, improved redundancy, and better connectivity. However, challenges such as compatibility issues, security concerns, and added management complexity must be addressed to keep performance optimal, so careful planning is crucial for a successful expansion.

How does network congestion affect internet speed and how can it be managed?

Network congestion slows down internet speed by causing delays, packet loss, and reduced throughput. Effective management strategies such as traffic shaping, load balancing, caching, QoS settings, infrastructure upgrades, CDNs, and congestion control algorithms can mitigate these issues and improve overall network performance.
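Traffic shaping is often built on a token bucket: tokens accumulate at the permitted rate up to a burst limit, and a packet may be sent only if enough tokens are available. This sketch passes timestamps in explicitly so it is deterministic, and it only decides whether a packet conforms; a real shaper would queue non-conforming packets (dropping them instead is called policing). The class name and units are assumptions:

```python
class TokenBucket:
    """Token-bucket conformance check for traffic shaping (simplified sketch)."""

    def __init__(self, rate: float, capacity: float):
        self.rate = rate  # tokens (e.g. bytes) added per second
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity  # start with a full bucket
        self.last = None  # time of the previous call, in seconds

    def allow(self, size: float, now: float) -> bool:
        if self.last is not None:
            # Refill in proportion to elapsed time, capped at the burst size.
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= size:
            self.tokens -= size
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate=1000, capacity=1500)
    for t, size in [(0.0, 1500), (0.0, 100), (0.5, 500), (0.5, 1)]:
        print(t, size, bucket.allow(size, t))
```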

What are the benefits of using a powerline adapter for home network connectivity?

Powerline adapters offer a simple way to extend home network connectivity by carrying network traffic over existing electrical wiring. Their benefits include easy installation, stable connections, extended coverage, good performance, and cost-effectiveness, although actual speeds depend on the quality and layout of the home's wiring.

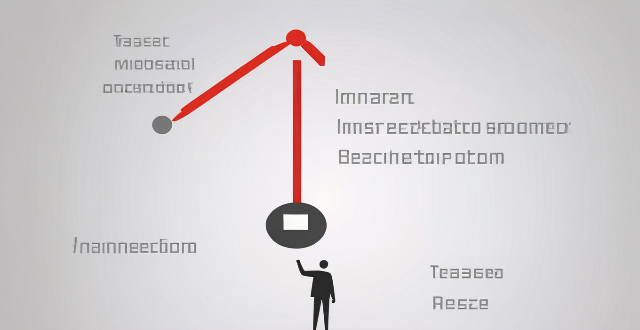

What causes network latency?

Network latency is the delay that data experiences when traveling between two points in a network, and it is a critical metric for productivity, collaboration, and user experience. Its main causes are propagation delay (the time signals take to cover the physical distance), transmission delay (the time needed to put all of a packet's bits onto the link, which depends on bandwidth), processing delay in routers and other devices, and queuing delay when links are congested.
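These causes add up to a simple one-way latency estimate. The sketch assumes signals travel through fiber at about 2 × 10^8 m/s (roughly two-thirds of the speed of light in a vacuum) and takes processing and queuing delays as given inputs, since they depend on the equipment and current load; the function name is hypothetical:

```python
FIBER_SPEED_M_PER_S = 2.0e8  # approximate signal speed in optical fiber

def latency_breakdown_ms(distance_km: float, packet_bytes: int, link_bps: float,
                         processing_ms: float = 0.0, queuing_ms: float = 0.0) -> dict:
    """Split one-way latency into its main components, all in milliseconds."""
    parts = {
        "propagation": distance_km * 1000 / FIBER_SPEED_M_PER_S * 1000,
        "transmission": packet_bytes * 8 / link_bps * 1000,
        "processing": processing_ms,
        "queuing": queuing_ms,
    }
    parts["total"] = sum(parts.values())  # computed before "total" is added
    return parts

if __name__ == "__main__":
    # A 1500-byte packet over 1000 km of fiber on a 100 Mbps link.
    print(latency_breakdown_ms(1000, 1500, 100e6))
```

Over long distances propagation dominates (5 ms versus 0.12 ms of transmission in the usage line), which is why extra bandwidth cannot remove latency caused by distance.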

What are the benefits of using a virtual private network (VPN) for network security protection?

A Virtual Private Network (VPN) offers seven key benefits for network security protection:

1. **Encryption and Secure Data Transmission**: VPNs encrypt internet traffic between the device and the VPN server, securing data transmission, especially on public Wi-Fi networks.
2. **Anonymity and Privacy**: By routing connections through remote servers, VPNs hide users' IP addresses and physical locations from the sites they visit, enhancing online privacy, although the VPN provider itself can still see the traffic.
3. **Access to Geo-Restricted Content**: VPNs let users bypass geographical restrictions and access blocked or restricted content.
4. **Protection Against Bandwidth Throttling**: Because the traffic is encrypted, ISPs cannot easily identify and selectively throttle particular types of traffic.
5. **Enhanced Security on Public Networks**: Using a VPN on public networks adds an extra layer of protection against attackers on the same network.
6. **Remote Access to Work Networks**: VPNs give employees secure remote access to company resources.
7. **Avoiding Censorship**: In regions with internet censorship, VPNs can help users reach the unrestricted internet.

For the best protection, choose a reputable VPN provider and practice good cybersecurity habits.

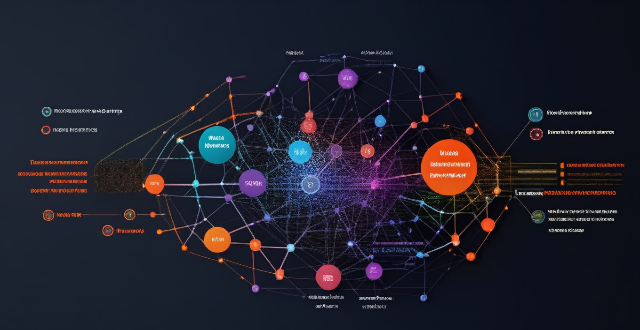

What are the latest techniques in network optimization?

Recent network optimization techniques include software-defined networking (SDN), network function virtualization (NFV), machine learning and artificial intelligence (AI), edge computing, and Multipath TCP (MPTCP). SDN separates the control plane from the data plane, allowing centralized management and control of network devices. NFV replaces dedicated hardware appliances with virtualized network functions running on standard servers. Machine learning and AI let networks detect and respond to changes in traffic patterns automatically, optimizing performance without manual intervention. Edge computing moves computational resources closer to end users or devices, reducing latency and improving overall network performance. MPTCP lets a single connection use multiple paths between two endpoints at the same time, reducing congestion and improving reliability. Together, these techniques help networks stay efficient and reliable while handling growing amounts of data.
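The control/data plane split in SDN can be sketched in a few lines: a central controller that knows the whole topology computes routes and produces per-switch forwarding tables, and switches then only look up the next hop. This sketch assumes shortest-path (Dijkstra) routing with symmetric link costs and omits the protocol (such as OpenFlow) a real controller would use to install the tables; all function names and the topology are hypothetical:

```python
import heapq

def compute_next_hops(links: dict, destination: str) -> dict:
    """Controller-side route computation: each switch's next hop toward destination.

    links maps every switch to {neighbor: link_cost}; costs are assumed symmetric.
    """
    dist = {destination: 0}
    next_hop = {}
    heap = [(0, destination)]
    # Dijkstra outward from the destination builds a shortest-path tree.
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue  # stale heap entry
        for neighbor, cost in links[node].items():
            candidate = d + cost
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                next_hop[neighbor] = node  # neighbor forwards toward destination via node
                heapq.heappush(heap, (candidate, neighbor))
    return next_hop

def install_flow_tables(links: dict, destinations: list) -> dict:
    """Build {switch: {destination: next_hop}} tables to push to the data plane."""
    tables = {switch: {} for switch in links}
    for dest in destinations:
        for switch, hop in compute_next_hops(links, dest).items():
            tables[switch][dest] = hop
    return tables

if __name__ == "__main__":
    topology = {
        "s1": {"s2": 1, "s3": 5},
        "s2": {"s1": 1, "s3": 1},
        "s3": {"s1": 5, "s2": 1},
    }
    print(install_flow_tables(topology, ["s3"]))
```

Because the controller sees the whole network, a topology change only requires recomputing and pushing new tables, rather than waiting for every device to converge on its own.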

What is considered high network latency?

High network latency is a delay in data transmission large enough to hurt the performance of applications and services. It is influenced by distance, congestion, hardware performance, bandwidth limitations, QoS settings, and interference. What counts as high depends on the application: a delay that is harmless for email or file downloads can make voice calls or online games unusable. For voice, for example, ITU-T Recommendation G.114 advises keeping one-way delay below 150 ms. High latency can be identified with tools such as ping and traceroute, and mitigated by upgrading hardware, increasing bandwidth, optimizing QoS settings, reducing physical distance, and minimizing interference.
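Ping sends ICMP echo requests, which usually requires raw-socket privileges when done from a program. A rough substitute is to time a TCP handshake, as in this sketch; it is an approximation, since it measures one handshake round trip plus any DNS lookup for a hostname, and it needs a port that accepts connections. The function name is hypothetical:

```python
import socket
import time

def tcp_connect_rtt_ms(host: str, port: int, timeout: float = 3.0) -> float:
    """Time a TCP three-way handshake as a rough round-trip latency estimate."""
    start = time.perf_counter()
    # create_connection returns once the handshake completes (or raises on failure).
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000
```

For example, `tcp_connect_rtt_ms("example.com", 443)` times a connection to a web server's HTTPS port. Taking several measurements and looking at the minimum or median gives a steadier figure than a single sample.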

What is network latency and how does it impact user experience?

Network latency is the delay in data transmission over a network, influenced by factors such as distance, congestion, and hardware limitations. It negatively impacts user experience in online gaming, video conferencing, streaming services, web browsing, and online shopping, leading to frustration and reduced engagement. Reducing latency through optimized network infrastructure can enhance user satisfaction.

How does network latency affect online gaming?

Network latency, or "lag," is the delay in data transmission between a player's device and the game server. It affects gameplay smoothness, multiplayer interaction, game design, and user experience. High latency causes input delay, jittery movement, synchronization issues, communication delays, and disconnections, which can make games frustrating or even unplayable. Low latency, by contrast, gives responsive controls, smooth movement, fair play, effective communication, and an immersive experience. Game developers reduce the visible effects of latency with techniques such as client-side prediction, interpolation of other players' positions, and server-side lag compensation. Managing latency is therefore crucial for a high-quality online gaming environment.
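Interpolation hides irregular update timing by drawing other players slightly in the past, blending between the two most recent server snapshots. This is a minimal sketch with a hypothetical `Snapshot` type and linear blending; real games also handle rotation, extrapolation, and missing packets:

```python
from dataclasses import dataclass

@dataclass
class Snapshot:
    """A server update: where a remote player was at a given time (hypothetical type)."""
    time_ms: float
    x: float
    y: float

def interpolate(a: Snapshot, b: Snapshot, render_time_ms: float) -> tuple[float, float]:
    """Linearly blend positions between snapshots a and b at render_time_ms."""
    if b.time_ms == a.time_ms:
        return (b.x, b.y)
    t = (render_time_ms - a.time_ms) / (b.time_ms - a.time_ms)
    t = max(0.0, min(1.0, t))  # clamp: never guess beyond the known snapshots
    return (a.x + (b.x - a.x) * t, a.y + (b.y - a.y) * t)

if __name__ == "__main__":
    older, newer = Snapshot(0.0, 0.0, 0.0), Snapshot(100.0, 10.0, 20.0)
    for render_time in (0.0, 25.0, 50.0, 100.0):
        print(render_time, interpolate(older, newer, render_time))
```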

How can I reduce network latency in my home?

To reduce network latency at home, check your internet speed, upgrade your router, use wired connections where possible, optimize your router settings (for example, by enabling QoS), limit bandwidth-heavy applications and devices, place your router in a central location, extend coverage with a Wi-Fi extender or mesh network, and close unused applications and browser tabs.

What industries will benefit the most from network slicing capabilities?

Network slicing, a technology enabled by software-defined networking (SDN) and network function virtualization (NFV), partitions a physical network into multiple virtual networks so that resources can be allocated according to each service's requirements. The industries expected to benefit most include automotive, healthcare, manufacturing, energy, financial services, and entertainment and media. Across these sectors, network slicing has the potential to transform communication systems and improve service delivery, operational efficiency, and user experience.

Why does my network latency fluctuate throughout the day?

Network latency fluctuates for several reasons. Congestion varies with traffic volume, large file transfers, and server load, all of which change over the course of the day. Physical distance and infrastructure also matter, including your geographical location, network hardware, and differences between ISPs. Finally, local conditions such as wireless interference, multiple devices sharing bandwidth, and malware or viruses can degrade performance. To reduce fluctuations, upgrade your equipment, optimize your Wi-Fi setup, schedule large downloads for off-peak hours, use wired connections, and scan for malware.
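To see whether latency really fluctuates, it helps to collect round-trip samples at different times of day and summarize them. This sketch defines jitter as the mean absolute difference between consecutive samples, which is one common definition (RTP, for example, uses a smoothed variant in RFC 3550); the function name is hypothetical and the sample values in the usage line are made up:

```python
import statistics

def latency_stats(rtts_ms: list[float]) -> dict:
    """Summarize round-trip samples (ms): spread, average, and jitter."""
    if len(rtts_ms) < 2:
        raise ValueError("need at least two samples")
    diffs = [abs(b - a) for a, b in zip(rtts_ms, rtts_ms[1:])]
    return {
        "min": min(rtts_ms),
        "mean": statistics.mean(rtts_ms),
        "max": max(rtts_ms),
        "stdev": statistics.pstdev(rtts_ms),
        "jitter": statistics.mean(diffs),  # mean change between consecutive samples
    }

if __name__ == "__main__":
    print(latency_stats([20, 22, 20, 40]))
```

Comparing these summaries for morning, evening, and late-night samples shows whether the variation tracks peak usage hours.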

What is a 5G network and how does it work?

A 5G network is the fifth generation of mobile networks, offering significant improvements in speed, capacity, and responsiveness over its predecessor, 4G. It uses higher frequency bands, including millimeter-wave spectrum, together with advanced antenna technology such as massive MIMO and beamforming, to deliver enhanced mobile broadband, lower latency, higher reliability, massive IoT connectivity, and better energy efficiency. The worldwide rollout of 5G is expected to enable applications and services that earlier network technologies could not support.

How do communication satellites enable real-time data transmission and monitoring?

Communication satellites enable real-time data transmission and monitoring by acting as relay stations in space: they receive signals from one location on Earth and retransmit them to another. This relies on a combination of technology, infrastructure, and protocols.

Satellites operate in orbits such as geostationary orbit (GEO) and low Earth orbit (LEO). Geostationary satellites orbit at the same rate as the Earth rotates, so they appear fixed over one point on the surface, while LEO satellites move across the sky relative to the ground.

A transmission proceeds in four steps. First, a signal such as a phone call, internet data, or a video feed is generated at a source location. Second, a ground station with powerful transmitters and antennas sends it up to the satellite using radio waves. Third, the satellite amplifies the signal and shifts it to a different frequency so that the downlink does not interfere with the uplink or other signals, then transmits it back down to another ground station. Finally, the received signal is distributed to its destination, such as a phone network, the internet, or a monitoring station.

Real-time monitoring depends on how quickly data travels via satellite. Modern systems keep latency (the delay in signal transmission) low, especially with LEO satellites, which are much closer to Earth. Satellites can also be networked to provide redundancy and extra bandwidth for large-scale monitoring systems. The key technologies and infrastructure are ground stations, satellite design, network protocols, and satellite constellations, while the main challenges include weather effects, geographical constraints, and regulatory issues.
In summary, communication satellites enable real-time data transmission and monitoring by acting as high-altitude relay stations, utilizing advanced technologies and infrastructure to deliver signals across vast distances with minimal delay.
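The latency advantage of LEO satellites follows from geometry. This sketch computes the minimum ground-to-satellite-to-ground propagation delay for a satellite directly overhead, assuming straight-line radio paths at the speed of light; real delays are longer because of slant paths, on-board processing, and terrestrial routing. The 550 km LEO altitude is an illustrative assumption:

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
GEO_ALTITUDE_KM = 35_786  # altitude of geostationary orbit above the equator

def relay_delay_ms(altitude_km: float) -> float:
    """One-way ground -> satellite -> ground propagation delay, satellite overhead."""
    return 2 * altitude_km / SPEED_OF_LIGHT_KM_PER_S * 1000

if __name__ == "__main__":
    for name, altitude in (("GEO", GEO_ALTITUDE_KM), ("LEO", 550)):
        print(f"{name}: {relay_delay_ms(altitude):.1f} ms one way")
```

A geostationary relay adds roughly a quarter of a second each way before any other delay, while a low LEO relay adds only a few milliseconds.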

Can upgrading my internet package reduce network latency?

Upgrading an internet package may or may not reduce latency. Latency depends on factors such as the ISP's infrastructure, the type of connection, your location, and your network devices. Switching technologies, for example from DSL to fiber optic, can lower latency, whereas simply increasing bandwidth on the same connection mainly speeds up large transfers and typically does less for the delay of small, interactive requests unless the old connection was saturated. Consider the specifics of your situation before deciding to upgrade.
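A back-of-the-envelope model shows why extra bandwidth helps some tasks much more than others. It assumes fetch time is the round trips spent waiting plus the time to push the bytes through the link, ignoring TCP slow start and other protocol effects; the function name is hypothetical, and the sizes, speeds, and RTT below are illustrative:

```python
def fetch_time_ms(size_bytes: int, bandwidth_mbps: float, rtt_ms: float,
                  round_trips: int = 1) -> float:
    """Rough time to fetch an object: waiting on round trips plus serialization time."""
    transfer_ms = size_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000
    return round_trips * rtt_ms + transfer_ms

if __name__ == "__main__":
    for label, size in (("50 KB page", 50_000), ("1 GB file", 1_000_000_000)):
        for mbps in (100, 1000):
            print(f"{label} at {mbps} Mbps: {fetch_time_ms(size, mbps, rtt_ms=40):.1f} ms")
```

With a 40 ms RTT, going from 100 to 1,000 Mbps saves only about 3.6 ms on the 50 KB page (44 ms versus 40.4 ms), whereas the 1 GB download drops from about 80 s to about 8 s.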

What is the difference between a router and a modem in network connectivity?

A router and a modem play distinct roles in a network. A modem connects the home to the ISP, converting between the digital data that devices use and the signals carried over telephone lines or cables. A router creates a local area network (LAN) that lets multiple devices connect and communicate with each other and, through the modem, with the internet. Combination devices integrate both functions; they are convenient but may lack advanced features found in separate units. Understanding these differences is crucial for setting up and maintaining a reliable internet connection.

What is the difference between TCP and UDP protocols?

TCP and UDP are transport layer protocols in the TCP/IP suite with distinct characteristics. TCP is connection-oriented and reliable: it retransmits lost data and delivers it in order, at the cost of a larger header (at least 20 bytes) and lower transmission efficiency. It is used by applications that need reliability, such as FTP and HTTP (up to HTTP/2). UDP is connectionless and does not guarantee delivery; its header is only 8 bytes and it is more efficient, so it suits real-time applications that can tolerate some data loss, such as video streaming and online games.
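The header overhead difference can be seen by packing each protocol's fixed header fields. This sketch builds a UDP header (8 bytes) and a minimal TCP header without options (20 bytes; options can extend it to 60). The sequence number, acknowledgment number, and window fields are what TCP uses for reliability and flow control. Checksums are left at zero here as a simplifying assumption, because computing them properly requires an IP pseudo-header; the helper names are hypothetical:

```python
import struct

SYN, ACK = 0x02, 0x10  # two of the TCP flag bits

def udp_header(src_port: int, dst_port: int, payload_len: int, checksum: int = 0) -> bytes:
    # Four 16-bit fields: source port, destination port, length (header + data), checksum.
    return struct.pack("!HHHH", src_port, dst_port, 8 + payload_len, checksum)

def tcp_header(src_port: int, dst_port: int, seq: int, ack: int, flags: int,
               window: int, checksum: int = 0, urgent: int = 0) -> bytes:
    data_offset = 5 << 4  # header length in 32-bit words (5 = 20 bytes), top 4 bits
    return struct.pack("!HHIIBBHHH", src_port, dst_port, seq, ack,
                       data_offset, flags, window, checksum, urgent)

if __name__ == "__main__":
    print(len(udp_header(5000, 53, 32)))  # 8
    print(len(tcp_header(5000, 80, 1, 0, SYN, 65535)))  # 20
```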

How does network slicing differ from traditional network management techniques?

Network slicing, enabled by SDN and NFV, creates multiple virtual networks on a common infrastructure so that services such as IoT and automotive systems each get a network tailored to their needs. It offers dynamic resource allocation, scalability, stronger isolation between services, and simpler management through automation. Traditional network management, by contrast, treats the network as a single monolithic system with statically allocated resources, which makes it more complex to manage and potentially less secure. Network slicing is therefore a more adaptable solution for diverse and growing connectivity needs.
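Dynamic resource allocation between slices can be sketched as guaranteed minimums plus a weighted share of the spare capacity. This is a hypothetical policy for illustration only; real slicing is orchestrated across the radio, transport, and core networks. The slice names follow the 5G service categories eMBB, URLLC, and mMTC, but the numbers are made up:

```python
def allocate_capacity(total_mbps: float, slices: dict) -> dict:
    """slices maps name -> (guaranteed_mbps, weight); returns name -> allocated Mbps."""
    reserved = sum(guaranteed for guaranteed, _ in slices.values())
    if reserved > total_mbps:
        raise ValueError("slice guarantees exceed physical capacity")
    spare = total_mbps - reserved
    total_weight = sum(weight for _, weight in slices.values())
    # Each slice keeps its guarantee (isolation) and shares leftover capacity by weight.
    return {
        name: guaranteed + (spare * weight / total_weight if total_weight else 0.0)
        for name, (guaranteed, weight) in slices.items()
    }

if __name__ == "__main__":
    demo = {"urllc": (100, 1), "embb": (300, 3), "mmtc": (50, 1)}
    print(allocate_capacity(1000, demo))
```

Re-running the allocation whenever demand or guarantees change is what distinguishes this dynamic approach from the static provisioning of traditional network management.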